Originally published on 4 January 2025

Designing location-based AR experiences requires positioning virtual objects with extreme accuracy, because being even 1 degree off can cause drift that breaks player immersion.

MeshMap's go-to solution has been to combine professional LiDAR scans with AprilTag fiducial markers and a simple calibration tool. With this combo we have created and operated AR game tours that span several acres!

First, let’s take a step back.

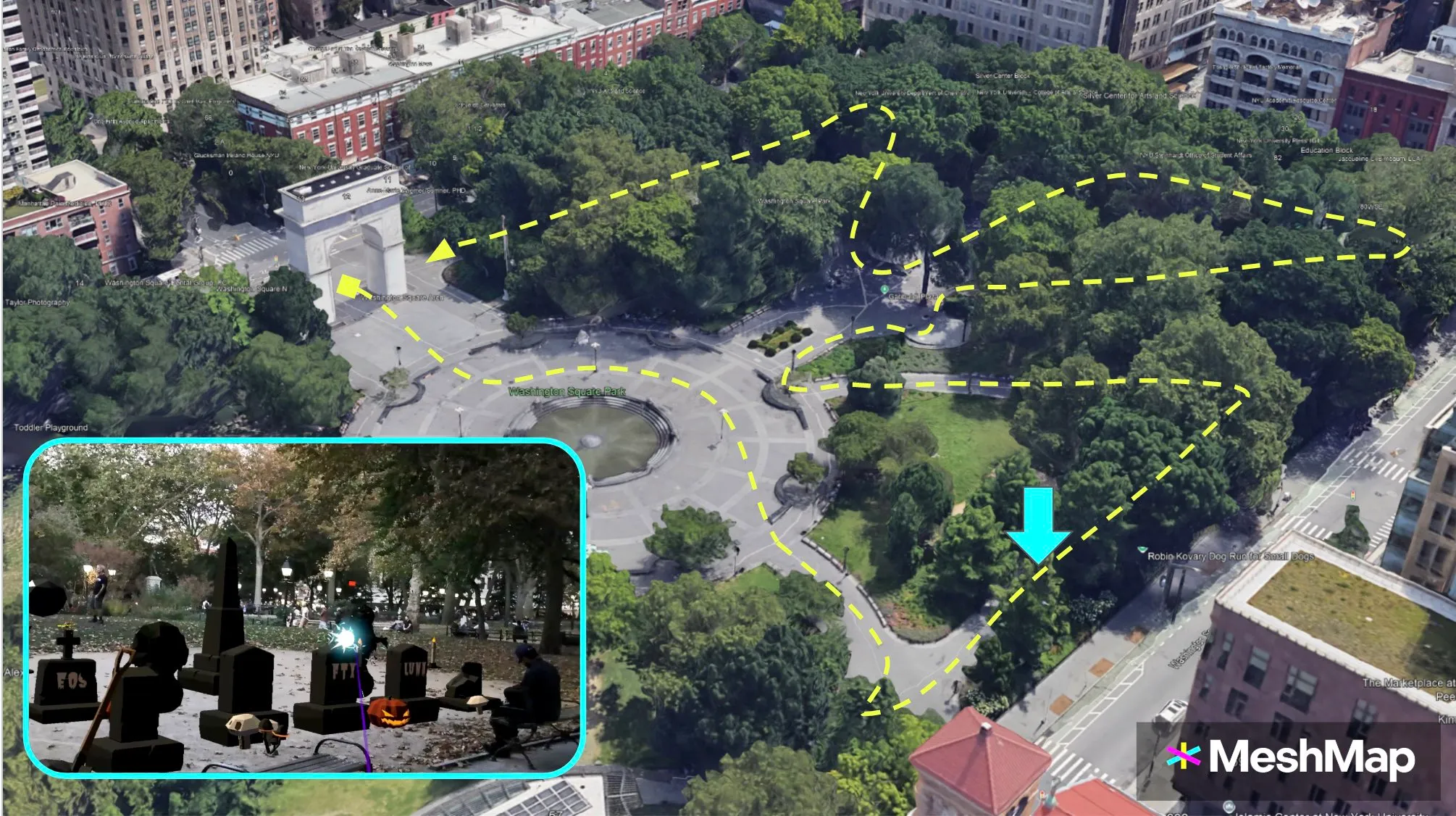

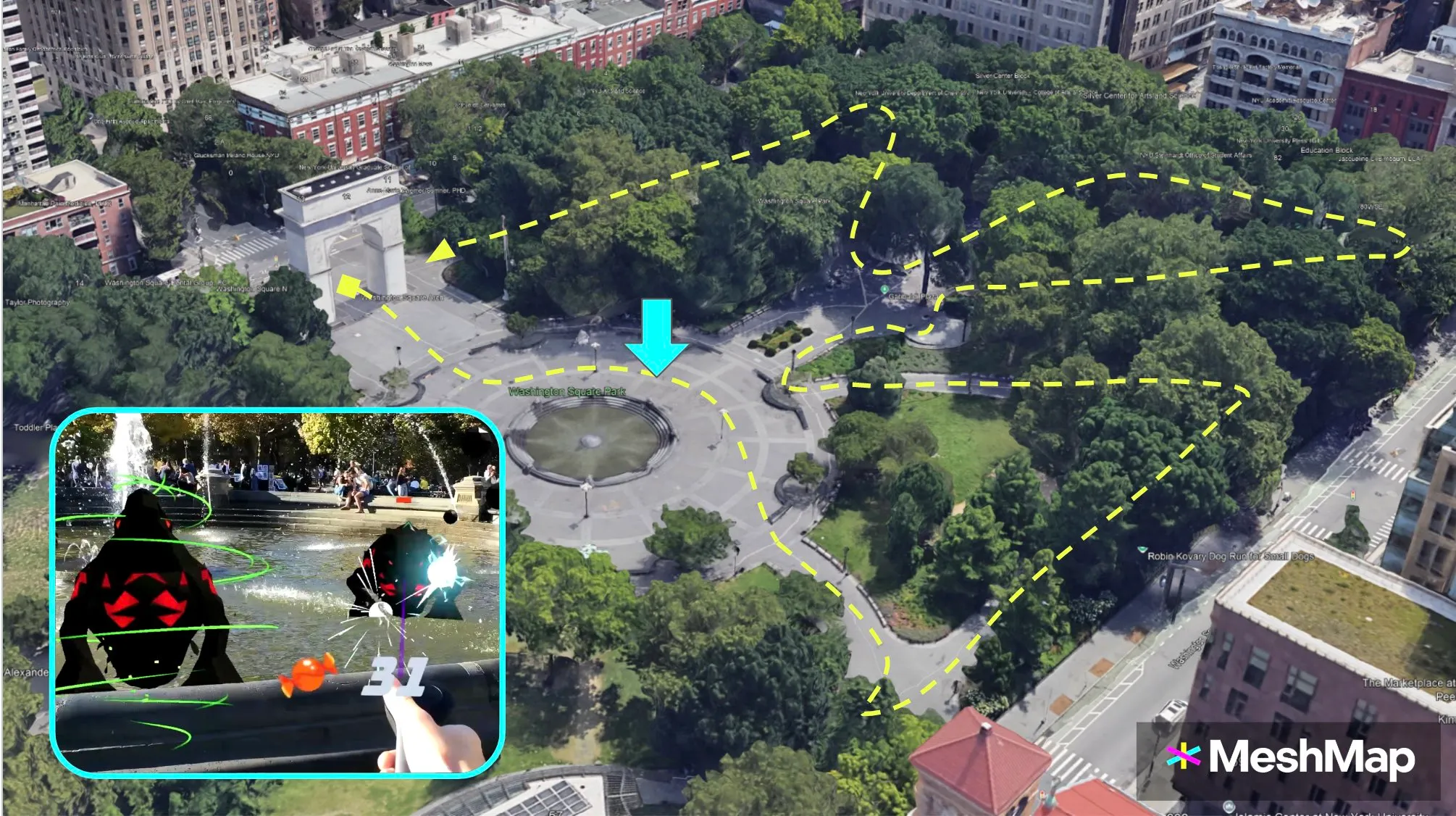

This is the map of our Halloween 2024 event in New York City. We organized this demo event to cap off our time in the a16zcrypto startup accelerator program (CSX).

The path was almost a half-mile long (700m), winding along the paths of Washington Square Park and covering over 4.6 acres (18,700 sq m).

We guided groups of 5-10 participants at a time, all wearing Magic Leap 2 headsets, along this 30-minute game tour.

The game included a scavenger hunt for hidden candies, blasting AI ghouls, interactive minigames, and virtual decorations all over the park. Here are some of their locations:

As the developer, my challenge was to consistently have the AR content positioned in the correct spots as participants moved through the entire park.

First, we experimented with visual positioning system (VPS) localization methods from industry leaders, but were not satisfied with their performance.

In our experience, VPS can work well enough to position a couple of objects in easily identifiable areas, but it remains very inaccurate over long distances and in places where the visual features are unstable or hard to identify, such as areas with seasonal foliage, passersby, and temporary furniture.

For example, we tried two different VPS originally designed for smartphone AR and both positioned our content several meters away from the expected locations.

At that point, we also hadn't yet started using the fiducial markers for localization.

Our first tours used just our 3D LiDAR scans from the Leica BLK2GO and an easy-to-find starting point directly in front of the arch.

Thanks to having a clear starting point for the experience, we could guarantee that every player’s Magic Leap 2 headset localized in roughly the correct starting position.

This meant that Unity's world coordinate space would be roughly aligned with the real world.

However, there was still the possibility that rotational error could mess things up.

For example, if the headset was turned 1 degree to the left at launch, then the position of every object would be skewed slightly to the left. This skew would increase the further away one went from the localization spot (in this case, the tour's starting point).

The furthest object I placed was 150 meters away. With 1 degree of rotational error, that object would be off by 2.62 meters. With 5 degrees, the error increases to 13.1 meters.

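The formula for the error distance is E = 2r·sin(θ/2), where r is the distance from the localization spot and θ is the rotational error. This is the length of the chord between where the object should be and where it actually appears.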
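As a quick way to play with this formula, here is a minimal Python sketch. The function name `drift_error` and the 100-meter example distance are illustrative assumptions, not part of our actual tooling.

```python
import math

def drift_error(distance_m: float, angle_deg: float) -> float:
    """Chord length E = 2r*sin(angle/2): how far an object lands from its
    intended spot when the whole scene is rotated by angle_deg."""
    return 2.0 * distance_m * math.sin(math.radians(angle_deg) / 2.0)

# Illustrative values only: an object 100 m from the localization spot.
for angle in (1.0, 5.0):
    print(f"{angle:.0f} deg of rotation at 100 m -> {drift_error(100.0, angle):.2f} m off")
```

For small angles the result grows almost linearly with both distance and angle, which is why long routes are so sensitive to tiny rotational mistakes.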

Given this potential for error, I was impressed that in most cases the content stayed within 1 to 2 meters of its intended position during our tours, way outperforming the VPS we had initially tried.

The game tour was a lot of fun, but we wanted a more reliable localization method for our next event.

I began by adding fiducial marker tracking to our localization methods.

In practice, this involves placing an AprilTag marker on the ground at the starting point of the tour and using the headset's cameras to detect where the marker is.

Once the marker's position and rotation are detected, all of the virtual content is aligned to it. This ensures we always have the correct and identical starting position across all devices.

Our marker tracking method also included options to "freeze" the global x and z rotations of our objects. This reduced the potential of rotational error along those axes.
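To illustrate the idea of aligning content to the marker while freezing the x and z rotations, here is a rough Python sketch. It assumes a y-up coordinate system like Unity's and keeps only the marker's heading (yaw) around the vertical axis. The function names and the idea of passing the marker's forward vector are hypothetical; this is not our production code.

```python
import math

def yaw_from_forward(forward):
    """Heading in radians about the vertical (y) axis.
    The y component is ignored, so any detected tilt is discarded."""
    fx, _, fz = forward
    return math.atan2(fx, fz)

def place_content(marker_pos, marker_forward, local_offset):
    """Map an offset authored relative to the marker into world coordinates,
    using only the marker's position and yaw."""
    yaw = yaw_from_forward(marker_forward)
    c, s = math.cos(yaw), math.sin(yaw)
    ox, oy, oz = local_offset
    mx, my, mz = marker_pos
    # Rotation about the y axis, then translation to the marker position.
    return (mx + ox * c + oz * s, my + oy, mz - ox * s + oz * c)

# A marker detected with a slight upward tilt still yields level content.
print(place_content((0.0, 0.0, 0.0), (0.0, 0.3, 1.0), (1.0, 2.0, 3.0)))
```

Dropping the tilt means a marker that is detected as slightly pitched or rolled cannot lift distant content into the air or push it underground; only the heading error remains.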

However, rotational error can persist for several reasons, including incorrect marker placement, the headset's SLAM losing tracking, camera depth perception inconsistency in different lighting conditions, and user error.

Operating the tour at the same time of day and providing clear instructions to participants would help mitigate these sources of error.

We hosted our Christmas game tour in a part of southeast Central Park that was a smaller total area than Washington Square Park (*just* 7,300 sq m, or 1.81 acres), but with a 43% longer max distance of 215 meters.

The longer distance made solving localization a very high priority.

With 1 degree of rotational error, the furthest object would be off by 3.75 meters. With 5 degrees, the error increases to about 18.76 meters.

So if the AprilTag was turned in slightly the wrong direction when placed on the ground, then the candy canes, Christmas trees, and snowmen would end up far away from their intended spots.

I could add more markers along the path, but each would still be susceptible to these same sources of error, albeit over shorter distances.
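The trade-off can be estimated with a small Python sketch that assumes each object is aligned to its nearest marker and that every marker has the same rotational error. The object and marker positions below are made up for illustration; only the 215-meter maximum distance comes from our route.

```python
import math

def drift_error(distance_m, angle_deg):
    """Chord length E = 2r*sin(angle/2)."""
    return 2.0 * distance_m * math.sin(math.radians(angle_deg) / 2.0)

def worst_case_error(object_positions, marker_positions, angle_deg):
    """Largest drift when each object is aligned to its nearest marker.
    Positions are (x, z) pairs in meters on the ground plane."""
    worst = 0.0
    for ox, oz in object_positions:
        nearest = min(math.hypot(ox - mx, oz - mz) for mx, mz in marker_positions)
        worst = max(worst, drift_error(nearest, angle_deg))
    return worst

objects = [(0.0, 50.0), (0.0, 215.0)]        # hypothetical layout
one_marker = [(0.0, 0.0)]
two_markers = [(0.0, 0.0), (0.0, 110.0)]     # hypothetical second marker

print(f"one marker:  {worst_case_error(objects, one_marker, 1.0):.2f} m")
print(f"two markers: {worst_case_error(objects, two_markers, 1.0):.2f} m")
```

The second marker roughly halves the worst case in this layout, but it does nothing about the per-marker error itself.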

Creating a manual calibration tool was the unlock we needed.

Participants could easily tweak the placement of content as they explored the route by using a UI to adjust the rotation offset.

If content was floating in the air or drifting to either side, simply pressing the corresponding button a couple times would fix it!
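Here is a simplified Python sketch of how such a calibration could work for the side-to-side case: each button press nudges a yaw offset by a fixed step, and content positions are rotated around the marker by that offset. The class name, the 0.25-degree step, and the 2D (x, z) representation are assumptions for illustration, not a description of our actual tool.

```python
import math
from dataclasses import dataclass

@dataclass
class YawCalibration:
    anchor: tuple              # (x, z) of the marker on the ground plane
    step_deg: float = 0.25     # hypothetical rotation per button press
    offset_deg: float = 0.0    # accumulated correction

    def nudge(self, presses: int) -> None:
        """Positive presses turn content toward +x, negative toward -x."""
        self.offset_deg += presses * self.step_deg

    def apply(self, point):
        """Rotate an (x, z) point around the anchor by the current offset."""
        ax, az = self.anchor
        dx, dz = point[0] - ax, point[1] - az
        a = math.radians(self.offset_deg)
        c, s = math.cos(a), math.sin(a)
        return (ax + dx * c + dz * s, az - dx * s + dz * c)

calibration = YawCalibration(anchor=(0.0, 0.0))
calibration.nudge(4)  # four presses = 1 degree with the assumed step
print(calibration.apply((0.0, 100.0)))
```

Because the correction is a single rotation around the marker, a small nudge moves far-away content much more than nearby content, which matches how the drift builds up in the first place.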

Marker tracking + calibration enabled us to design large outdoor AR experiences where other methods, including visual positioning systems (VPS), had fallen short.

Check out the full Christmas recap video (7 minutes) if you want to see our location-based AR solutions in action!